Improving the Accuracy and Performance of ML Algorithms with a Bagging (Bootstrap Aggregation) Classifier

Intro

Machine Learning models such as Decision Trees, can be prone to overfitting on the training set which can lead to wrong predictions on new data. Bootstrap Aggregation (bagging) is a ensembling method that attempts to resolve overfitting for classification or regression problems. Bagging aims to improve the accuracy and performance of machine learning algorithms. It does this by taking random subsets of an original dataset, with replacement, and fits either a classifier (for classification) or regressor (for regression) to each subset. The predictions for each subset are then aggregated through majority vote for classification or averaging for regression, increasing prediction accuracy.

Evaluating the Base Classifier

To see how bagging can improve model performance, we must start by evaluating how the base classifier performs on the dataset. We will be looking to identify different classes of wines found in Sklearn’s wine dataset. The parameter as_frame is set equal to True so we do not lose the feature names when loading the data. We can predict the class of wine the unseen test set and evaluate the model performance.

1 | from sklearn import datasets |

1 | Train data accuracy: 1.0 |

The base classifier performs reasonably well on the dataset achieving 82% accuracy on the test dataset with the current parameters (Different results may occur if you do not have the random_state parameter set). Now that we have a baseline accuracy for the test dataset, we can see how the Bagging Classifier out performs a single Decision Tree Classifier.

Creating a Bagging Classifier

For bagging we need to set the parameter n_estimators, this is the number of base classifiers that our model is going to aggregate together. For this sample dataset the number of estimators is relatively low, it is often the case that much larger ranges are explored. Hyperparameter tuning is usually done with a grid search, but for now we will use a select set of values for the number of estimators. We start by importing the necessary model. To see how the Bagging Classifier performs with differing values of n_estimators we need a way to iterate over the range of values and store the results from each ensemble. The default parameter for the base classifier in BaggingClassifier is the DicisionTreeClassifier therefore we do not need to set it when instantiating the bagging model. With the models and scores stored, we can evaluate and visualize the BaggingClassifier performance.

1 | estimator_range = [2,4,6,8,10,12,14,16] |

By iterating through different values for the number of estimators we can see an increase in model performance from 82.2% to 95.5%. After 14 estimators the accuracy begins to drop, again if you set a different random_state the values you see will vary. That is why it is best practice to use cross validation to ensure stable results. In this case, we see a 13.3% increase in accuracy when it comes to identifying the type of the wine.

Another Form of Evaluation

As bootstrapping chooses random subsets of observations to create classifiers, there are observations that are left out in the selection process. These “out-of-bag” observations can then be used to evaluate the model, similarly to that of a test set. Keep in mind, that out-of-bag estimation can overestimate error in binary classification problems and should only be used as a compliment to other metrics. We saw in the last example that 12 estimators yielded the highest accuracy, so we will use that to create our model. This time setting the parameter oob_score to true to evaluate the model with out-of-bag score.

1 | oob_model = BaggingClassifier(n_estimators = 12, oob_score = True,random_state = 22) |

1 | Out-of-bag test data accuracy: 0.9555555555555556 |

Since the samples used in OOB and the test set are different, and the dataset is relatively small, there is a difference in the accuracy score (93.98%). It is rare that they would be exactly the same, again OOB should be used quick means for estimating error, but is not the only evaluation metric. The bagging classifier performs pretty well on the dataset achieving 95.5% accuracy on the test dataset with 12 estimators.

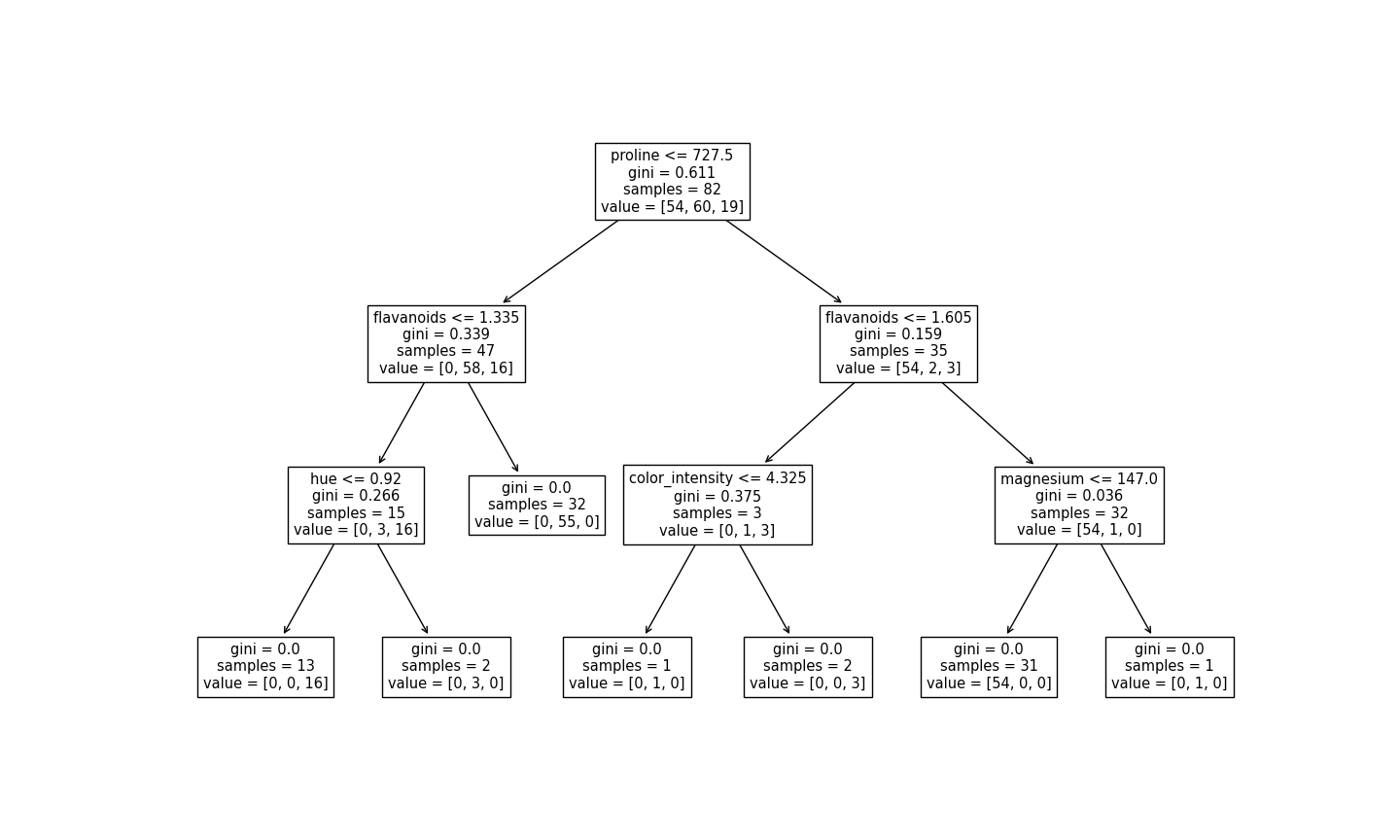

Generating Decision Trees

It is possible to see the individual decision trees that went into the aggregated classifier. This helps us to gain a more intuitive understanding on how the bagging model arrives at its predictions. However, this is only functional with smaller datasets, where the trees are relatively shallow and narrow making them easy to visualize. The different trees can be graphed by changing the estimator you wish to visualize.

1 | clf2 = BaggingClassifier(n_estimators = 12, oob_score = True,random_state = 22) |

Here we can see just the first decision tree that was used to vote on the final prediction. Again, by changing the index of the classifier you can see each of the trees that have been aggregated.

Bagging Classifier, Bootstrap Aggregation, Machine Learning — Jun 18, 2021

Search

Made with ❤️ and ☀️ on Earth.